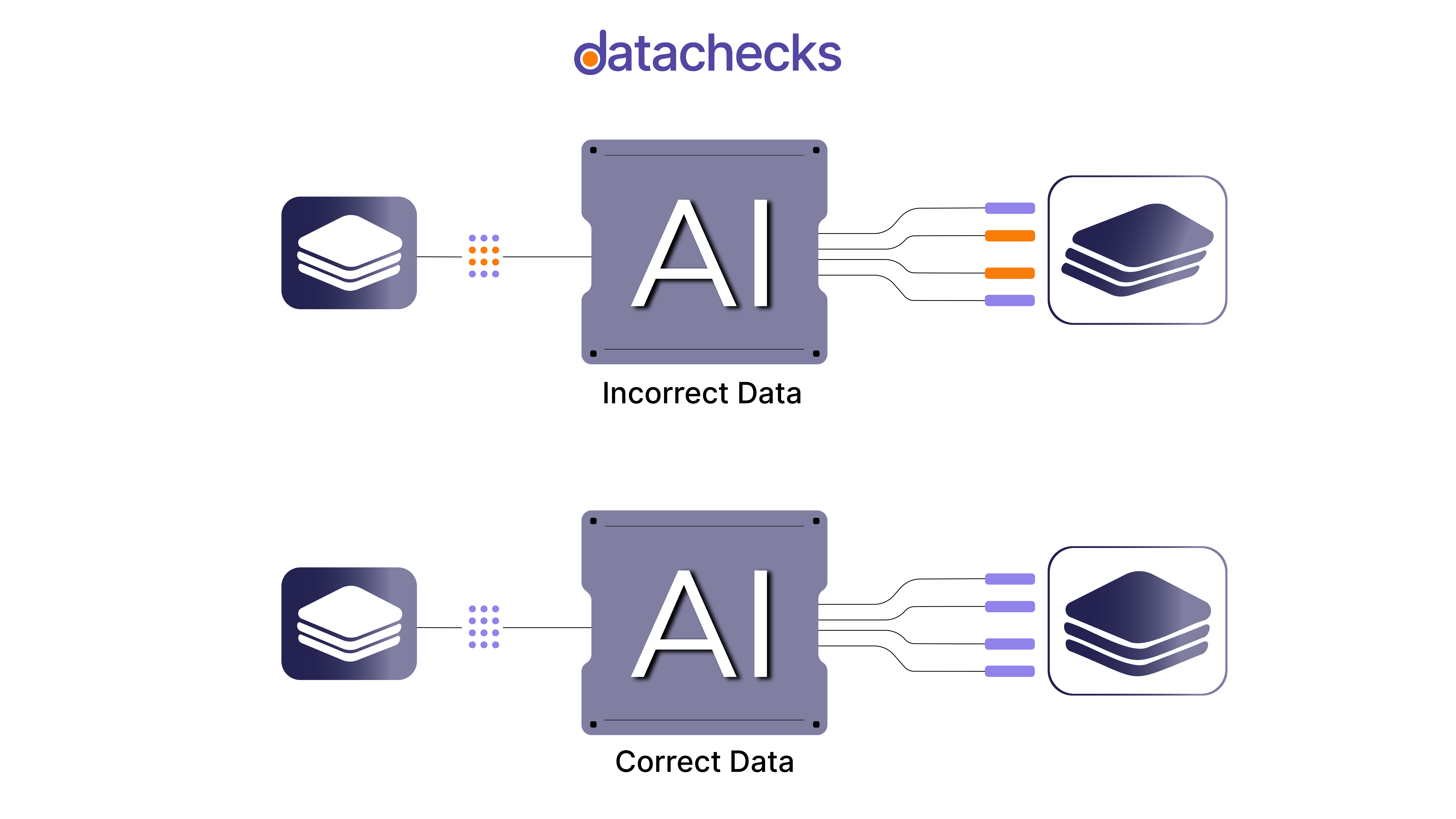

As AI-driven technologies continue to expand, their impact on key sectors such as recruitment, healthcare and finance is undeniable, and maintaining fairness and transparency becomes crucial. If the data feeding AI models is biased or flawed, the results are likely to be biased and potentially discriminatory. To achieve more accurate and unbiased results, the quality of this data must be high.

Why Does Data Quality Matter?

At the core of any AI system is the data used to train its machine learning algorithms. For AI to make fair decisions, the training data must not carry over the biases that exist in the real world. Poor data quality, such as missing values or unrepresentative datasets, can result in AI systems that reinforce existing inequalities or create new ones.

For instance, in automated hiring systems, excluding certain demographic groups or overrepresenting others in the training data can lead to discriminatory hiring practices, because the model learns and replicates these imbalances.

A 2023 study of automated hiring systems such as HireVue and Knockri found that biased or incomplete data leads to unfair outcomes, particularly when marginalized groups are underrepresented (paper-25). These findings underscore the importance of data quality for mitigating bias and ensuring fairness in AI systems.
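A common first check on a hiring model's outcomes is to compare selection rates across demographic groups. The sketch below computes each group's selection rate and its ratio to the highest-rate group, flagging ratios under 0.8 in the spirit of the "four-fifths rule" from the US Uniform Guidelines on Employee Selection Procedures. The function names, the `(group, selected)` input format and the 0.8 threshold are assumptions for illustration; a low ratio is a signal to investigate, not proof of discrimination.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the share of candidates selected in each group.

    decisions: iterable of (group, selected) pairs, where selected is a bool.
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}


def adverse_impact_report(decisions, threshold=0.8):
    """Compare each group's selection rate with the highest group's rate.

    Groups whose ratio falls below `threshold` (an assumed
    four-fifths-style cutoff) are flagged for further review.
    """
    rates = selection_rates(decisions)
    best = max(rates.values())
    if best == 0:
        raise ValueError("no candidates were selected in any group")
    return {
        group: {"rate": rate, "ratio": rate / best, "flagged": rate / best < threshold}
        for group, rate in rates.items()
    }


# Hypothetical screening outcomes for two groups of 10 candidates each.
decisions = (
    [("group_a", True)] * 6 + [("group_a", False)] * 4
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)
impact = adverse_impact_report(decisions)
print(impact["group_b"]["flagged"])  # True: 0.3 / 0.6 = 0.5, below 0.8
```

Comparing against the highest-rate group, rather than a fixed target, mirrors how the four-fifths rule is usually applied; other fairness metrics, such as comparing error rates instead of selection rates, may suit a given system better.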

Considerations to Improve Fairness in AI Systems

- Diversity and Representation: Data used to train AI systems must include a diverse range of perspectives and backgrounds. For instance, in hiring, data should represent candidates from different races, genders and ethnic backgrounds to avoid biased AI outcomes.

- Accuracy and Completeness: AI systems must be trained with accurate and complete data. For example, if the training data for a fraud-detection model lacks examples of certain types of fraud or includes mislabeled transactions, the model may fail to recognize those fraud patterns when they appear, resulting in financial losses.

- Transparency in Data Collection: Organizations building AI systems must be transparent about the sources and composition of their training data. If an AI algorithm is used to diagnose diseases, it is crucial to disclose whether the training data includes diverse patient demographics, such as age, gender, ethnicity, and pre-existing conditions.

- A study published in the journal Nature found that a widely used AI model for diagnosing skin cancer was trained primarily on images of lighter-skinned individuals, resulting in significantly lower diagnostic accuracy for patients with darker skin tones. A lack of transparency can mask potential biases in the AI model, leading to unequal treatment outcomes for certain patient groups.
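The representation and completeness checks above can be partly automated before training. The sketch below audits a list of records, reporting each group's share of the data, the fraction of that group's records with missing required fields, and whether the group falls below a minimum share. The field names (`gender`, `years_experience`, `degree`), the function name and the share thresholds are hypothetical; appropriate thresholds depend on the population the system will serve.

```python
from collections import Counter


def audit_dataset(records, group_key, required_fields, min_share=0.10):
    """Report each group's share of the dataset and its rate of incomplete records.

    records: list of dicts, one per training example.
    group_key: the demographic attribute to audit (hypothetical field name).
    required_fields: fields that must be present and non-empty.
    min_share: groups below this share are flagged (an assumed threshold).
    """
    total = len(records)
    counts = Counter(r.get(group_key, "unknown") for r in records)
    # Count, per group, the records where any required field is None or "".
    incomplete = Counter(
        r.get(group_key, "unknown")
        for r in records
        if any(r.get(field) in (None, "") for field in required_fields)
    )
    report = {}
    for group, n in counts.items():
        share = n / total
        report[group] = {
            "share": round(share, 3),
            "missing_rate": round(incomplete[group] / n, 3),
            "underrepresented": share < min_share,
        }
    return report


# Hypothetical applicant records: 9 male, 1 female, two with a missing degree.
applicants = (
    [{"gender": "male", "years_experience": 4, "degree": "BSc"} for _ in range(8)]
    + [{"gender": "male", "years_experience": 2, "degree": None}]
    + [{"gender": "female", "years_experience": 6, "degree": ""}]
)
report = audit_dataset(applicants, "gender", ["years_experience", "degree"], min_share=0.2)
print(report["female"])  # {'share': 0.1, 'missing_rate': 1.0, 'underrepresented': True}
```

Reporting missingness per group, rather than for the dataset as a whole, matters because incomplete records concentrated in one small group can be invisible in an overall average.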

The Role of Regulation in Data Quality

Regulatory frameworks, such as the EU AI Act, emphasize the importance of high-quality data in achieving fairness. The Act lays down strict rules for high-risk AI systems, including requirements that data be accurate, complete, and representative (paper-25). This regulatory push makes data quality a compliance obligation for such systems rather than merely a best practice.

Real-World Examples

Consider automated credit scoring systems. If these systems are trained on biased financial data that favors certain demographics, the AI could systematically deny loans to minority groups, even if their financial profiles are otherwise suitable.

Similarly, facial recognition systems trained on predominantly white faces have been found to perform poorly on people of color, resulting in misidentification and wrongful accusations. These examples show how directly data quality determines whether AI systems treat people fairly.
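Disparities like these only show up when a model is evaluated separately for each group rather than on a single overall score. The sketch below computes per-group error rates from labeled evaluation results and reports the gap between the worst- and best-served groups. The tuple format, the skin-tone group labels and the identity strings are illustrative assumptions, not the output format of any real face recognition system.

```python
def per_group_error_rates(results):
    """Return the misidentification rate for each group.

    results: iterable of (group, predicted_id, true_id) tuples from an
    evaluation set; a prediction counts as an error when the IDs differ.
    """
    totals, errors = {}, {}
    for group, predicted_id, true_id in results:
        totals[group] = totals.get(group, 0) + 1
        errors[group] = errors.get(group, 0) + int(predicted_id != true_id)
    return {group: errors[group] / totals[group] for group in totals}


def error_gap(rates):
    """Difference between the highest and lowest group error rates."""
    return max(rates.values()) - min(rates.values())


# Hypothetical evaluation: 20 trials per group.
results = (
    [("lighter", "id_1", "id_1")] * 19 + [("lighter", "id_2", "id_1")]
    + [("darker", "id_3", "id_3")] * 15 + [("darker", "id_4", "id_3")] * 5
)
rates = per_group_error_rates(results)
print(rates)  # {'lighter': 0.05, 'darker': 0.25}
print(round(error_gap(rates), 2))  # 0.2
```

In this made-up example the overall error rate is 15%, which hides the fact that one group is misidentified five times as often as the other.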

Final Thoughts

With great power comes great responsibility. AI is opening new doors, and its decision-making capabilities can make processes faster and less error-prone. However, we must keep in mind that poor-quality data fed into these models can lead to biased and unfair outcomes. For AI to be truly fair and trustworthy, organizations must prioritize data diversity, accuracy, and transparency, while also following evolving regulatory standards.

Data observability platforms are available to help address these challenges. Investing in strong data governance and continuous monitoring helps mitigate bias and supports fairness in AI applications.
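Continuous monitoring can start with something as simple as checking whether the make-up of incoming data drifts away from the data a model was trained on. The sketch below compares each group's share in a new batch with a baseline and reports shifts larger than a tolerance. It is a hypothetical illustration, not the API of any particular data observability platform; the `age_band` field, the function names and the 5-percentage-point tolerance are assumptions.

```python
from collections import Counter


def group_shares(records, group_key):
    """Fraction of records belonging to each value of `group_key`."""
    counts = Counter(r[group_key] for r in records)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}


def representation_drift(baseline, batch, group_key, tolerance=0.05):
    """Flag groups whose share in `batch` moved more than `tolerance` from `baseline`.

    Returns {group: signed change in share}, sorted by group name so the
    output is stable across runs.
    """
    base = group_shares(baseline, group_key)
    new = group_shares(batch, group_key)
    alerts = {}
    for group in sorted(set(base) | set(new)):
        # A group missing from one side counts as a share of 0.
        delta = new.get(group, 0.0) - base.get(group, 0.0)
        if abs(delta) > tolerance:
            alerts[group] = round(delta, 3)
    return alerts


# Hypothetical data: training set was balanced, the new batch is not.
baseline = [{"age_band": "18-34"}] * 50 + [{"age_band": "35+"}] * 50
batch = [{"age_band": "18-34"}] * 80 + [{"age_band": "35+"}] * 20
print(representation_drift(baseline, batch, "age_band"))  # {'18-34': 0.3, '35+': -0.3}
```

A check like this would typically run on every new batch, with alerts routed to whoever owns the model, so that a shift in who is represented is caught before it quietly changes the model's behavior.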